
Building a Docker-Based Slurm Cluster

kogun82

2024. 5. 21. 13:50

Build a Slurm cluster with docker-compose (including compute node resource settings)

1. Write the docker-compose yml file

services:
  slurmjupyter:
    image: rancavil/slurm-jupyter:19.05.5-1
    hostname: slurmjupyter
    user: admin
    volumes:
      - shared-vol:/home/admin
      - /Users/kogun82/Documents/docker/cluster/store:/BiO
    ports:
      - 8888:8888
      - 3030:3030
  slurmmaster:
    image: rancavil/slurm-master:19.05.5-1
    deploy:
      resources:
        limits:
          memory: 196G
    hostname: slurmmaster
    user: admin
    volumes:
      - shared-vol:/home/admin
      - /Users/kogun82/Documents/docker/cluster/store:/BiO
      # Mount the custom slurm.conf to apply the compute node resource settings
      - /Users/kogun82/Documents/docker/cluster/slurm.conf:/etc/slurm-llnl/slurm.conf
    ports:
      - 6817:6817
      - 6818:6818
      - 6819:6819
  slurmnode1:
    image: rancavil/slurm-node:19.05.5-1
    deploy:
      resources:
        limits:
          memory: 196G
    hostname: slurmnode1
    user: admin
    volumes:
      - shared-vol:/home/admin
      - /Users/kogun82/Documents/docker/cluster/store:/BiO
    environment:
      - SLURM_NODENAME=slurmnode1
    links:
      - slurmmaster
  slurmnode2:
    image: rancavil/slurm-node:19.05.5-1
    deploy:
      resources:
        limits:
          memory: 196G
    hostname: slurmnode2
    user: admin
    volumes:
      - shared-vol:/home/admin
      - /Users/kogun82/Documents/docker/cluster/store:/BiO
    environment:
      - SLURM_NODENAME=slurmnode2
    links:
      - slurmmaster
  slurmnode3:
    image: rancavil/slurm-node:19.05.5-1
    deploy:
      resources:
        limits:
          memory: 196G
    hostname: slurmnode3
    user: admin
    volumes:
      - shared-vol:/home/admin
      - /Users/kogun82/Documents/docker/cluster/store:/BiO
    environment:
      - SLURM_NODENAME=slurmnode3
    links:
      - slurmmaster
volumes:
  shared-vol:
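The three compute node services differ only in their number, so the repeated blocks can be generated instead of copied by hand. The following is a minimal sketch under that assumption; `NODE_TEMPLATE` and `render_nodes` are hypothetical names introduced here for illustration, and the image tag, host path, and memory limit are simply copied from the file above.

```python
# Hypothetical helper: render the repeated slurmnodeN service blocks as YAML text.
NODE_TEMPLATE = """\
  slurmnode{n}:
    image: rancavil/slurm-node:19.05.5-1
    deploy:
      resources:
        limits:
          memory: 196G
    hostname: slurmnode{n}
    user: admin
    volumes:
      - shared-vol:/home/admin
      - /Users/kogun82/Documents/docker/cluster/store:/BiO
    environment:
      - SLURM_NODENAME=slurmnode{n}
    links:
      - slurmmaster
"""


def render_nodes(count):
    """Return YAML text for `count` compute node services, numbered from 1."""
    return "".join(NODE_TEMPLATE.format(n=i) for i in range(1, count + 1))


if __name__ == "__main__":
    # Paste the printed text under `services:` in the compose file.
    print(render_nodes(3))
```

The output is plain text indented for placement under `services:`; it does not validate the result, so running `docker-compose config` on the final file is still worthwhile.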

2. Write the slurm.conf file

# slurm.conf file generated by configurator.html.

# Put this file on all nodes of your cluster.

# See the slurm.conf man page for more information.

#

SlurmctldHost=slurmmaster

#

MpiDefault=none

ProctrackType=proctrack/linuxproc

ReturnToService=1

SlurmctldPidFile=/var/run/slurmctld.pid

SlurmctldPort=6817

SlurmdPidFile=/var/run/slurmd.pid

SlurmdPort=6818

SlurmdSpoolDir=/var/spool/slurmd

SlurmUser=root

StateSaveLocation=/var/spool

SwitchType=switch/none

TaskPlugin=task/affinity

TaskPluginParam=Sched

# TIMERS

InactiveLimit=0

KillWait=30

MinJobAge=300

SlurmctldTimeout=120

SlurmdTimeout=300

Waittime=0

# SCHEDULING

SchedulerType=sched/backfill

SelectType=select/cons_res

SelectTypeParameters=CR_Core

# LOGGING AND ACCOUNTING

AccountingStorageType=accounting_storage/none

AccountingStoreJobComment=YES

ClusterName=cluster

JobCompType=jobcomp/none

JobAcctGatherFrequency=30

JobAcctGatherType=jobacct_gather/none

SlurmctldDebug=error

SlurmctldLogFile=/var/log/slurm-llnl/slurmctld.log

SlurmdDebug=error

SlurmdLogFile=/var/log/slurm-llnl/slurmd.log

# COMPUTE NODES

NodeName=slurmnode[1-10] CPUs=12 RealMemory=4096 State=UNKNOWN # CPU and memory settings

PartitionName=slurmpar Nodes=slurmnode[1-10] Default=YES MaxTime=INFINITE State=UP
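Note that `slurmnode[1-10]` declares ten nodes, while the docker-compose file starts only three containers. The sketch below expands the range and lists the declared nodes that have no matching container; those nodes will most likely not be reported as idle. `expand_hostlist` is a hypothetical helper that handles only a single `prefix[a-b]` range, not the full Slurm hostlist syntax.

```python
import re


def expand_hostlist(expr):
    """Expand a simple 'prefix[a-b]' expression into individual host names.

    Only a single numeric range is supported; anything else is returned as-is.
    """
    m = re.fullmatch(r"(\w+)\[(\d+)-(\d+)\]", expr)
    if m is None:
        return [expr]
    prefix, lo, hi = m.group(1), int(m.group(2)), int(m.group(3))
    return [f"{prefix}{i}" for i in range(lo, hi + 1)]


# Nodes declared in slurm.conf versus services defined in the compose file.
declared = expand_hostlist("slurmnode[1-10]")
started = {"slurmnode1", "slurmnode2", "slurmnode3"}
missing = [h for h in declared if h not in started]

print(len(declared), "declared;", len(missing), "without a container")
# prints: 10 declared; 7 without a container
```

To keep the two files in step, either narrow the range in slurm.conf to `slurmnode[1-3]` or add more node services to the compose file.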

3. Start docker-compose

docker-compose up -d

4. Verify that the containers are running

docker-compose ps

5. Check the Slurm nodes

scontrol show node

6. If sinfo shows that a node is not in the idle state, return it to a schedulable state

sudo scontrol update nodename=slurmnode1 state=resume
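With several nodes, the resume command has to be repeated once per node. The sketch below builds those commands from node-oriented `sinfo` output, assuming the text comes from `sinfo -N -h -o "%N %T"` (one "node state" pair per line). `resume_commands` is a hypothetical helper, the choice of which states to resume (`down`, `drain`) is an assumption, and the sample text is made up for illustration rather than captured from a real cluster.

```python
def resume_commands(sinfo_output, states=("down", "drain")):
    """Build one `scontrol update ... state=resume` command per matching node.

    `sinfo_output` is expected to hold one "<node> <state>" pair per line.
    """
    commands = []
    for line in sinfo_output.splitlines():
        parts = line.split()
        if len(parts) != 2:
            continue  # skip blank or unexpected lines
        node, state = parts
        if state.lower().startswith(states):
            commands.append(
                ["sudo", "scontrol", "update", f"nodename={node}", "state=resume"]
            )
    return commands


# Illustrative input only; real output depends on the cluster.
sample = """slurmnode1 idle
slurmnode2 down
slurmnode3 drained
"""

for cmd in resume_commands(sample):
    print(" ".join(cmd))
```

Each returned list can be passed to `subprocess.run` inside the container where `scontrol` is available; printing the commands first makes it easy to review them before anything is changed.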

7. Test Python code

#!/usr/bin/env python3

import time

import os

import socket

from datetime import datetime as dt

if __name__ == '__main__':
    print('Process started {}'.format(dt.now()))
    print('NODE : {}'.format(socket.gethostname()))
    print('PID : {}'.format(os.getpid()))
    print('Executing for 15 secs')
    time.sleep(15)
    print('Process finished {}\n'.format(dt.now()))

8. Bash shell script for job submission

#!/bin/bash

#

#SBATCH --job-name=test

#SBATCH --output=result.out

#SBATCH --ntasks=1

#SBATCH --cpus-per-task=10

#SBATCH --mem=1G

sbcast -f test.py /tmp/test.py

srun python3 /tmp/test.py
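The script requests 10 CPUs and 1G of memory, which has to fit within the `CPUs=12` and `RealMemory=4096` (megabytes) declared for each node in slurm.conf; a request beyond those limits would probably not be schedulable. The sketch below performs that comparison. `parse_mem_mb` and `fits_on_node` are hypothetical helpers, and the default limits are the values from the slurm.conf above.

```python
def parse_mem_mb(value):
    """Convert an sbatch --mem value such as '1G' or '512M' to megabytes."""
    units = {"K": 1 / 1024, "M": 1, "G": 1024, "T": 1024 * 1024}
    suffix = value[-1].upper()
    if suffix in units:
        return int(float(value[:-1]) * units[suffix])
    return int(value)  # a bare number is interpreted as megabytes


def fits_on_node(cpus_per_task, mem, node_cpus=12, node_mem_mb=4096):
    """Check a single-task request against one node's CPUs and RealMemory."""
    return cpus_per_task <= node_cpus and parse_mem_mb(mem) <= node_mem_mb


print(fits_on_node(10, "1G"))  # True: 10 <= 12 CPUs and 1024 <= 4096 MB
print(fits_on_node(16, "1G"))  # False: more CPUs than a node offers
```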

9. Submit the job

sbatch job.sh

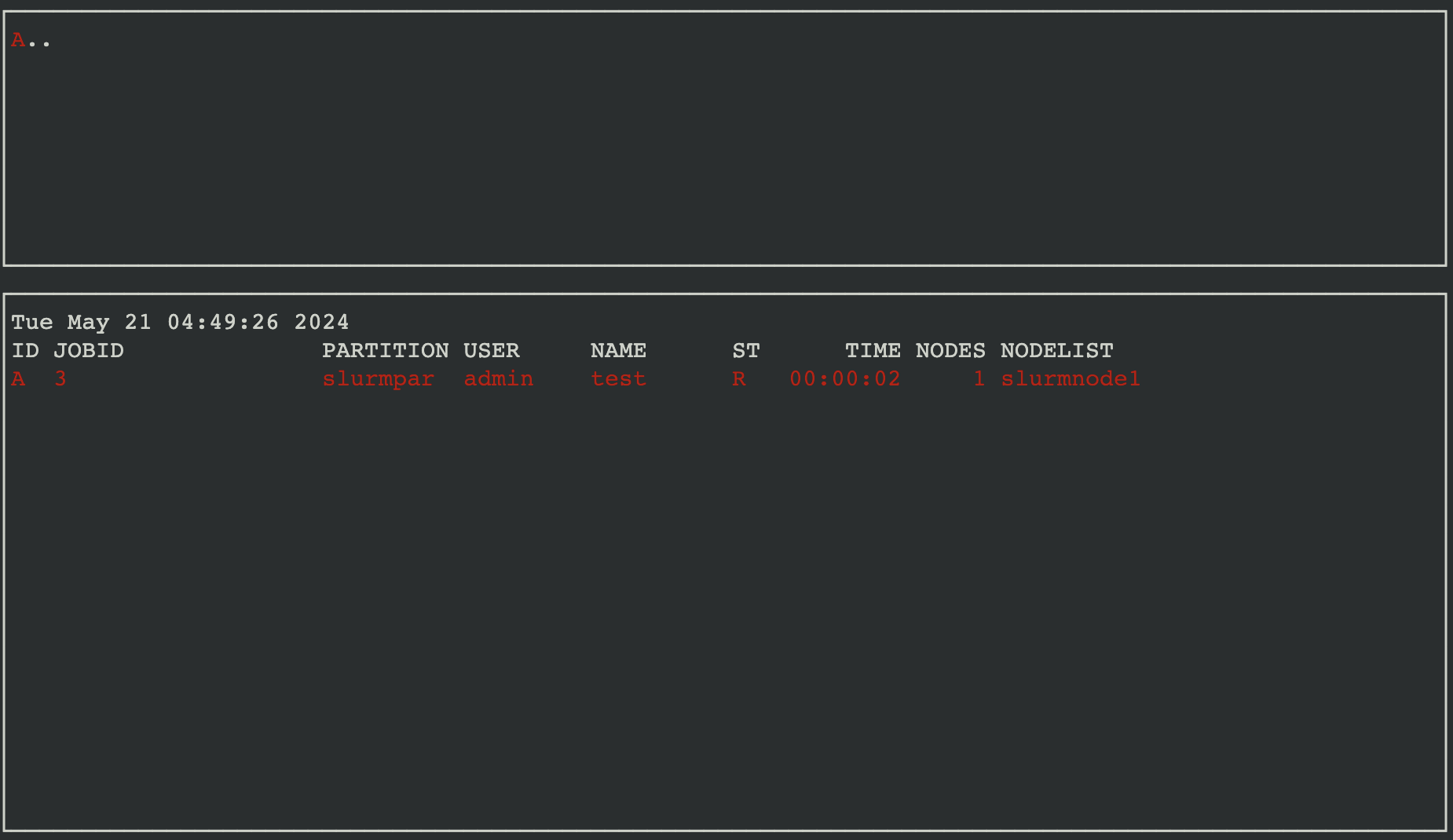
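On success, `sbatch` prints a line of the form `Submitted batch job <id>`. If the submission is driven from a script, the job ID can be extracted from that line for later status checks. This is a minimal sketch; `parse_job_id` is a hypothetical helper and the job number in the example is arbitrary.

```python
import re


def parse_job_id(sbatch_stdout):
    """Return the job ID from sbatch's confirmation line, or None if absent."""
    m = re.search(r"Submitted batch job (\d+)", sbatch_stdout)
    return int(m.group(1)) if m else None


print(parse_job_id("Submitted batch job 42"))  # 42
```

The returned ID can then be passed to commands such as `squeue -j <id>` or `scontrol show job <id>`.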

10. Check the job status

smap
